About Me

I am a graduate ECE student at Northeastern University and a research engineer in the Traverso Lab at Brigham and Women's Hospital and the Massachusetts Institute of Technology (MIT). My interests include machine learning, computer vision, and embedded firmware, and my research explores the boundary between software and hardware in search of novel solutions in individualized medicine.

I am passionate about helping others, particularly disadvantaged youth, break into STEM. I believe that science is fundamentally elegant and interconnected, and that diverse perspectives in research and industry are essential to continued innovation and progress.

Please feel free to reach out if you'd like to connect!

Details

My Engineering Journey

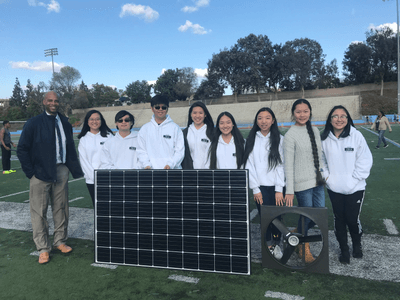

SOLAR POWERED FAN

My fascination with engineering was sparked during my high school years when I had the privilege of teaming up with SunSpark Technology to develop a portable solar-powered fan. This project not only instilled in me a deep appreciation for design but also fueled my fervor for making a meaningful impact through my work. Collaborating with seasoned industry experts, we spearheaded the installation of Walnut High School's inaugural solar power systems, paving the way for fellow students to take up the cause of sustainable innovation.

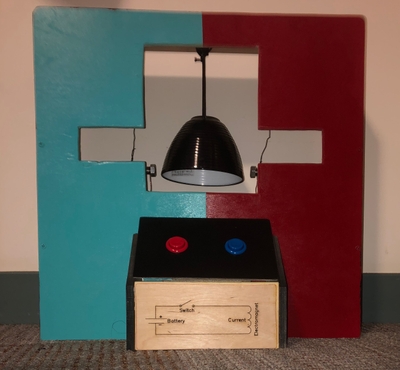

ELECTROMAGNETIC BELL

During my freshman year, I had the opportunity to engage in a stimulating interdisciplinary collaboration with fellow electrical and mechanical engineers. Our goal was to create an interactive and portable exhibit for a children's STEM museum, with a modest budget of $100. After careful consideration, we decided to showcase the fascinating relationship between electricity, magnetism, and physical reactions by designing an electromagnetic bell. Throughout the project, we worked closely together, taking into account our individual skill sets, the limited budget and timeframe, as well as the needs and interests of our intended audience. By doing so, we were able to create a thoughtfully designed and engaging exhibit that not only demonstrated scientific concepts, but also sparked curiosity and excitement in young minds.

PROJECT MOTION

My six-month senior capstone project was the culmination of my education and experiences. My team and I developed a drone platform for autonomous infrastructure inspection in GPS-denied environments using transfer learning and the Mask R-CNN framework. We equipped the platform with sensors and cameras that captured RGB-D images, and our machine learning algorithms used image segmentation to detect and localize defects and anomalies with remarkable accuracy. Our solution impressed the judges in the annual engineering capstone competition, earning us first place and demonstrating how cutting-edge technologies can have a meaningful impact on society.

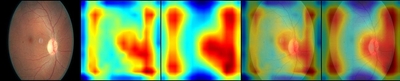

VISION-DR - Visual Insights and Saliency Integrated Overlay Neural Network for Diabetic Retinopathy

My team and I developed a deep learning model, VISION-DR, that uses saliency maps to provide visual insights into the decision-making process of a convolutional neural network (CNN) for diabetic retinopathy (DR) detection. Our model not only achieved state-of-the-art performance on the Kaggle Diabetic Retinopathy Detection dataset, but also provided visual explanations for its predictions, making it more interpretable and trustworthy for medical professionals. By combining the power of deep learning with visual analytics, we hope to improve the accuracy and reliability of DR diagnosis, ultimately saving lives and reducing healthcare costs.
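To give a feel for what a saliency map measures, here is a minimal sketch. The write-up above does not say which saliency method VISION-DR uses, so this example swaps in occlusion sensitivity, a simple model-agnostic technique: mask one patch of the image at a time and record how much the model's score drops. The names `occlusion_saliency` and `toy_predict` are hypothetical, and `toy_predict` is only a stand-in for a trained CNN, not our actual model.

```python
import numpy as np

def occlusion_saliency(image, predict, patch=4, baseline=0.0):
    """Return a map of score drops caused by masking each patch.

    `predict` is any callable that maps an image array to a scalar score.
    Larger values mean the masked region mattered more to the prediction.
    """
    height, width = image.shape[:2]
    base_score = predict(image)
    saliency = np.zeros((height, width), dtype=np.float64)
    for y in range(0, height, patch):
        for x in range(0, width, patch):
            occluded = image.copy()
            occluded[y:y + patch, x:x + patch] = baseline  # hide one patch
            saliency[y:y + patch, x:x + patch] = base_score - predict(occluded)
    return saliency

def toy_predict(image):
    """Hypothetical stand-in for a CNN: mean brightness of one fixed region."""
    return float(image[8:12, 8:12].mean())

image = np.ones((16, 16))
saliency = occlusion_saliency(image, toy_predict, patch=4)
```

In this toy setup only the region that `toy_predict` looks at receives a non-zero saliency value. With a real classifier, `predict` would return the probability of the predicted DR grade, and the resulting map could be overlaid on the fundus image.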

FROGS - Fine Resolution Optimization and Gradient Smoothing

My team developed an image generation pipeline that combines a Stable Diffusion model with a super-resolution model to generate high-resolution images from text. Our pipeline, FROGS, can run natively on memory-limited systems by generating multiple overlapping quadrants and blending them together with smoothing techniques. We also applied gradient smoothing, which keeps visible artifacts to a minimum. FROGS was able to generate high-quality images at arbitrary resolutions, including 3840 × 2160, from text descriptions.
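The idea of blending overlapping quadrants can be sketched as follows. This is an illustration under stated assumptions, not the FROGS implementation: it assumes a linear feathering mask and a weighted average in the overlap regions, and the helper names (`tile_origins`, `feather_mask`, `blend_tiles`) are hypothetical.

```python
import numpy as np

def tile_origins(size, tile, overlap):
    """Start offsets of tiles that cover [0, size) and share `overlap` pixels."""
    if tile >= size:
        return [0]
    stride = tile - overlap
    starts = list(range(0, size - tile, stride))
    starts.append(size - tile)  # last tile sits flush with the border
    return starts

def feather_mask(height, width, overlap):
    """Weights that ramp up linearly from each tile edge (assumed scheme)."""
    def ramp(n):
        weights = np.ones(n)
        k = min(overlap, n // 2)
        if k > 0:
            edge = np.linspace(0.0, 1.0, k + 2)[1:-1]  # strictly positive
            weights[:k] = edge
            weights[n - k:] = edge[::-1]
        return weights
    return np.outer(ramp(height), ramp(width))[..., None]

def blend_tiles(tiles, origins, out_shape, overlap):
    """Weighted average of (h, w, C) tiles placed at (y, x) origins."""
    acc = np.zeros(out_shape, dtype=np.float64)
    norm = np.zeros(out_shape[:2] + (1,), dtype=np.float64)
    for tile, (y, x) in zip(tiles, origins):
        h, w = tile.shape[:2]
        mask = feather_mask(h, w, overlap)
        acc[y:y + h, x:x + w] += tile * mask
        norm[y:y + h, x:x + w] += mask
    return acc / norm

# Demo: crop a random image into overlapping tiles, then stitch it back.
rng = np.random.default_rng(0)
image = rng.random((10, 12, 3))
origins = [(y, x) for y in tile_origins(10, 6, 2) for x in tile_origins(12, 6, 2)]
tiles = [image[y:y + 6, x:x + 6] for y, x in origins]
stitched = blend_tiles(tiles, origins, image.shape, overlap=2)
```

Because every tile in the demo is cropped from the same image, the stitched result matches the original. In a real pipeline each tile would be generated independently, and the feathered weights would smooth out the small disagreements between neighboring tiles. Only one tile needs to be in memory at a time, which is what makes this approach friendly to memory-limited systems.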